Physical Computing

Gen AI

Computer Vision

APIs

Creative Technology

Treating code, hardware, and machine intelligence as raw design materials, I develop functional prototypes to explore the tangible future of human-computer interaction.

Highlights

Tangible Interaction

Prototyped a tangible interface exploring haptic feedback and motion through a custom-built sphere fitted with a vibration motor and a gyroscope. Built with C++ (Arduino) and JavaScript, the system transmits sensor data as JSON for real-time interaction.
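A minimal sketch of the JavaScript side of such a JSON link. It assumes a hypothetical message format of one JSON object per line carrying gyroscope rates (`gx`, `gy`, `gz`), and maps rotation speed to a PWM duty value for the vibration motor. The field names, the `vib` reply key, and the 250 deg/s saturation point are illustrative assumptions, not the original protocol.

```javascript
// Hypothetical message format: one JSON object per line, e.g.
// {"gx": 12.5, "gy": -3.0, "gz": 0.8}  (gyroscope rates, assumed deg/s)
function parseSensorLine(line) {
  try {
    const msg = JSON.parse(line.trim());
    if (typeof msg.gx !== "number" || typeof msg.gy !== "number" || typeof msg.gz !== "number") {
      return null; // ignore frames missing a gyro axis
    }
    return msg;
  } catch {
    return null; // serial noise, a truncated line, or a non-object value
  }
}

// Map overall rotation speed to a PWM duty value (0-255) for the vibration motor.
// maxRate is an assumed saturation point, not a measured spec.
function vibrationFromGyro({ gx, gy, gz }, maxRate = 250) {
  const rate = Math.hypot(gx, gy, gz);
  const t = Math.min(rate / maxRate, 1);
  return Math.round(t * 255);
}

// Reply to the microcontroller as JSON, again one object per line (hypothetical key "vib").
function encodeCommand(duty) {
  return JSON.stringify({ vib: duty }) + "\n";
}
```

Newline-delimited JSON keeps framing trivial on both ends: each side reads until `\n` and discards any line that fails to parse.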

Computer Vision

Investigated social interaction patterns using computer vision and TensorFlow to track and analyze human poses in real time. The experiment focused on interpreting non-verbal cues and behavioral dynamics through machine learning.
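One way to turn tracked poses into a social cue is to measure how close two people stand. The sketch below assumes keypoints shaped like TensorFlow.js pose-detection output (`{name, x, y, score}` with names such as `left_shoulder`); the helper names and the 0.3 confidence threshold are hypothetical, and the pose model call itself is left out.

```javascript
// Assumed input: an array of keypoints per person, each {name, x, y, score}.
function shoulderCenter(keypoints, minScore = 0.3) {
  const l = keypoints.find(k => k.name === "left_shoulder");
  const r = keypoints.find(k => k.name === "right_shoulder");
  // Skip people whose shoulders are missing or detected with low confidence.
  if (!l || !r || l.score < minScore || r.score < minScore) return null;
  return {
    x: (l.x + r.x) / 2,
    y: (l.y + r.y) / 2,
    width: Math.hypot(l.x - r.x, l.y - r.y),
  };
}

// Distance between two people measured in shoulder widths,
// a rough proximity cue that does not depend on camera distance.
function proximity(personA, personB) {
  const a = shoulderCenter(personA);
  const b = shoulderCenter(personB);
  if (!a || !b) return null;
  const meanWidth = (a.width + b.width) / 2;
  if (meanWidth === 0) return null;
  return Math.hypot(a.x - b.x, a.y - b.y) / meanWidth;
}
```

Normalizing by shoulder width is a design choice: raw pixel distances shrink as people move away from the camera, whereas a ratio stays comparable across the frame.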

More-Than-Human Sensing Game

Led an educational workshop introducing children to sensing technologies through a playful prototype equipped with vibration motors. Designed to spark curiosity about how animals 'feel' the world, fostering early engagement with tangible interfaces.

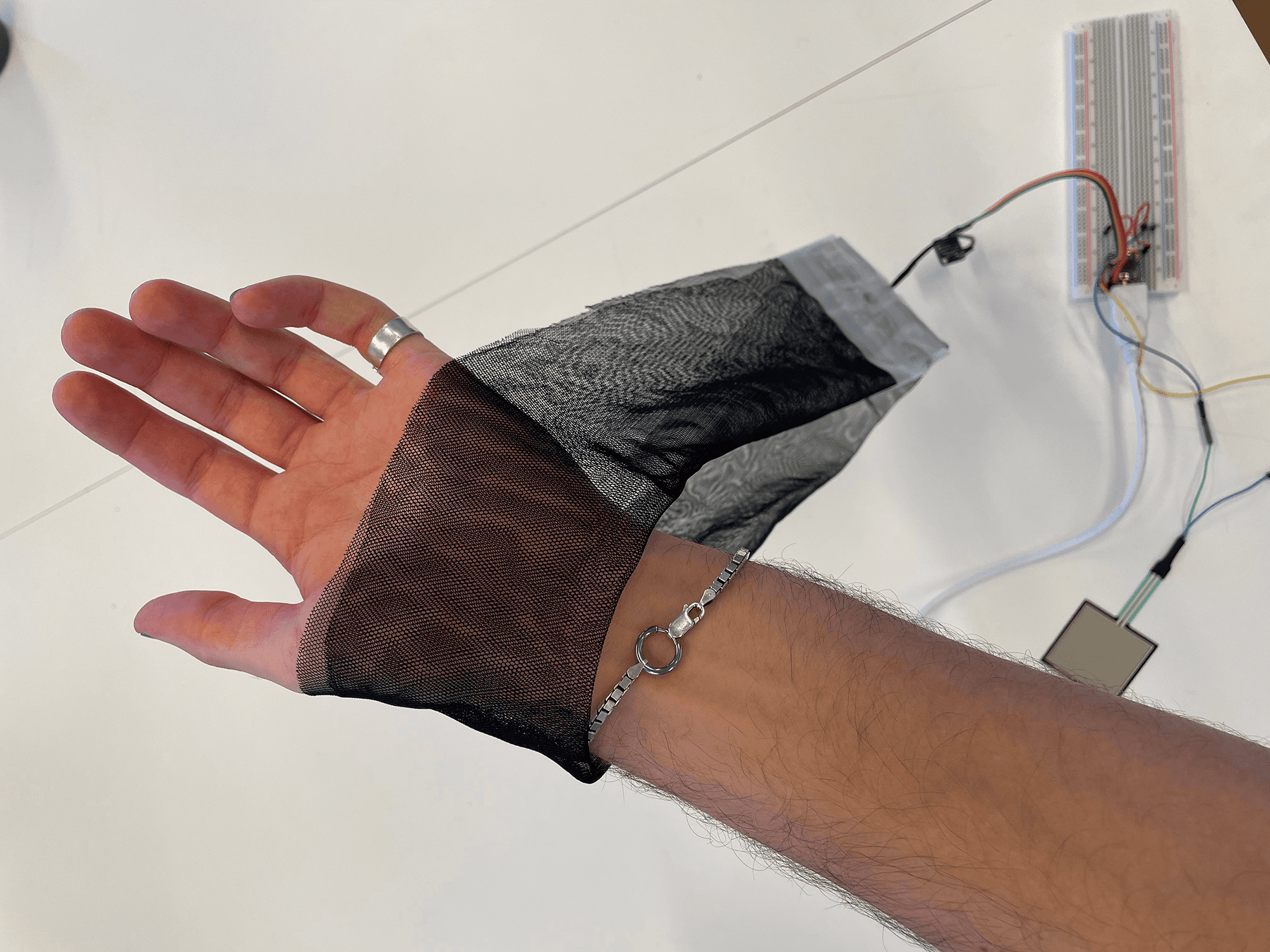

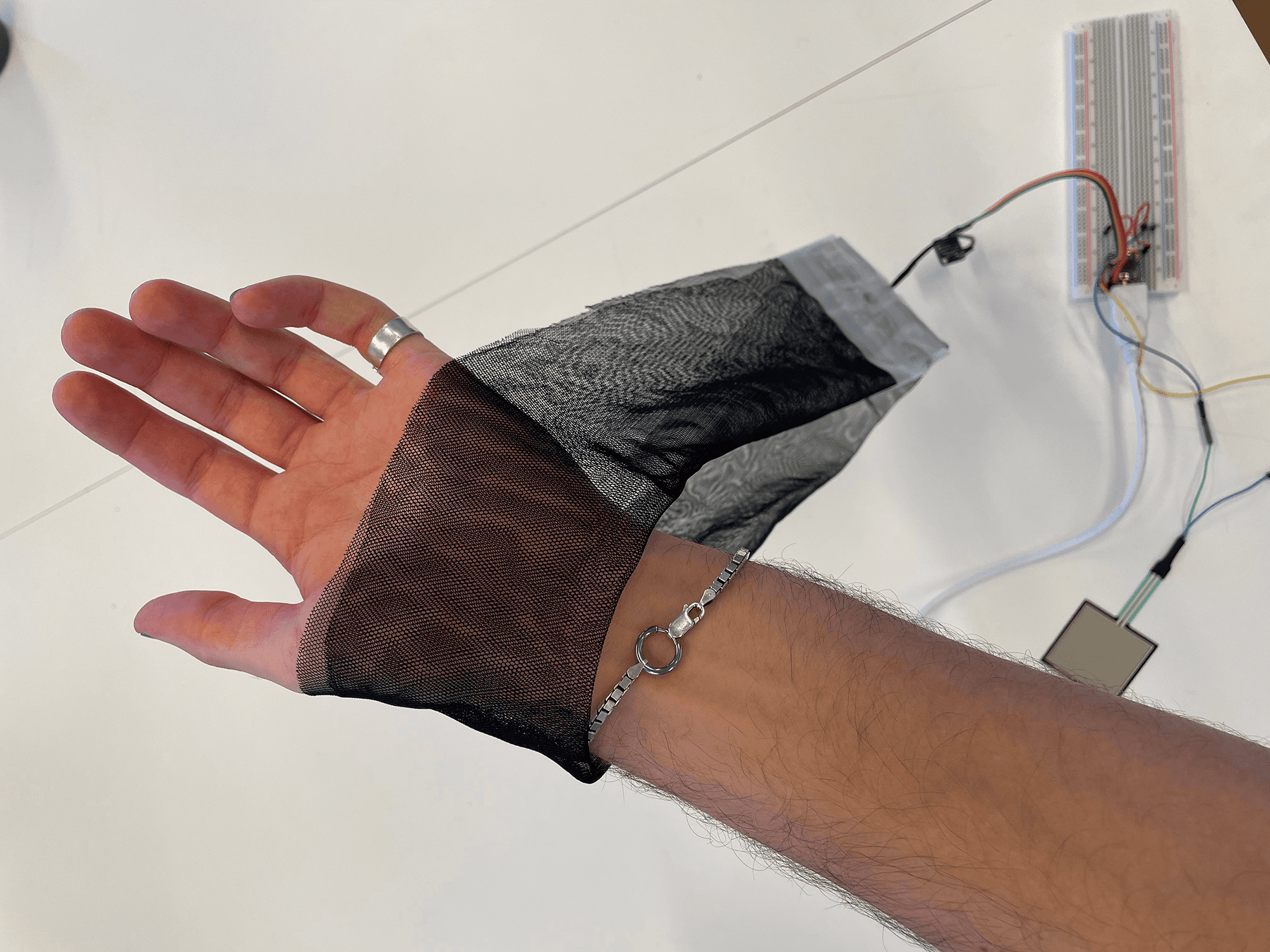

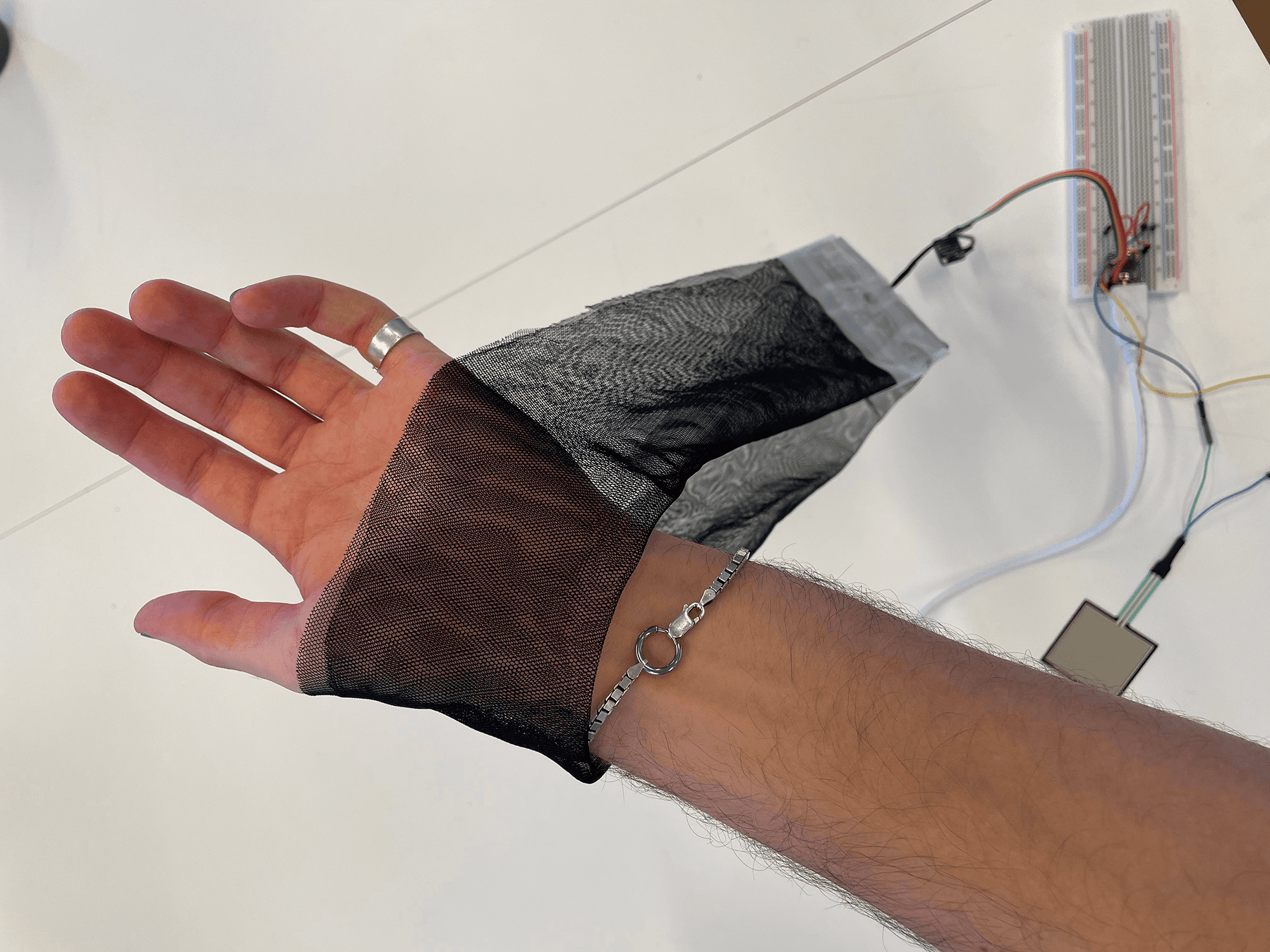

Emergent Haptics

Explored emergent haptics and interactivity in IoT devices, using pressure sensors to trigger complex haptic feedback loops. Programmed in JavaScript and C++ to investigate how simple inputs can produce rich tactile output patterns.
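A small illustration of how a simple input can yield a richer tactile pattern: a hypothetical leaky-integrator loop in which each pressure reading "charges" an energy value that decays every tick, and the motor intensity follows that energy. The `decay` and `gain` values are assumptions for illustration, not parameters from the original project.

```javascript
// Hypothetical feedback loop: pressure adds energy, which leaks away each tick.
function createHapticLoop({ decay = 0.9, gain = 0.5 } = {}) {
  let energy = 0;
  return function step(pressure) {          // pressure assumed normalized to 0..1
    energy = energy * decay + pressure * gain;
    energy = Math.min(energy, 1);           // clamp so the motor is never over-driven
    return Math.round(energy * 255);        // PWM duty for the vibration motor
  };
}

const step = createHapticLoop();
const pattern = [1, 1, 0, 0, 0].map(step); // two presses, then release
```

A short press produces a swell followed by a slowly fading buzz rather than a simple on/off pulse; tuning `decay` changes how long the "afterglow" lasts.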

AI in Co-Design

Developed a voice-enabled co-design tool that takes part in design sessions as an active participant, built on the OpenAI API, Whisper (speech-to-text), and text-to-speech. The application grounds its contributions in the context of the ongoing conversation to facilitate human-AI collaboration in creative workflows.
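Keeping the model aware of the conversation usually means resending recent turns with each request. The sketch below assumes transcribed turns arrive as `{speaker, text}` objects and formats a rolling window of them as chat-style `{role, content}` messages; `buildMessages`, the turn shape, and the 12-turn window are hypothetical, and the actual API call is omitted.

```javascript
// Hypothetical helper: keep the most recent transcribed turns and format them
// as chat-style messages for a language model request.
function buildMessages(systemPrompt, turns, maxTurns = 12) {
  const recent = turns.slice(-maxTurns); // rolling window bounds request size
  return [
    { role: "system", content: systemPrompt },
    ...recent.map(t => ({
      role: t.speaker === "assistant" ? "assistant" : "user",
      // Prefix human turns with the speaker's name so the model can tell participants apart.
      content: t.speaker === "assistant" ? t.text : `${t.speaker}: ${t.text}`,
    })),
  ];
}
```

Labeling each human turn with its speaker lets a single "user" role carry a multi-person design session.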

Plant-Data Visualization

Created a proof-of-concept installation that reads bio-signals from a living plant using EKG sensors. The system turns the raw data into real-time sound and visualizations in JavaScript, exploring how biological life can be translated into digital signals.
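Raw bio-signals are noisy, so a common first step is smoothing before mapping values to sound. This sketch assumes readings in a 10-bit range (0 to 1023, as from an Arduino `analogRead`) and uses an exponential moving average plus an exponential pitch mapping; the smoothing factor and the 110 to 880 Hz range are illustrative assumptions, not the installation's settings.

```javascript
// Exponential moving average to tame noisy raw readings.
function createSmoother(alpha = 0.1) {
  let value = null;
  return sample => {
    value = value === null ? sample : value + alpha * (sample - value);
    return value;
  };
}

// Map a reading to a pitch in Hz. The exponential curve makes equal changes
// in the signal sound like equal musical intervals.
function toFrequency(reading, inMin = 0, inMax = 1023, fMin = 110, fMax = 880) {
  const t = Math.min(Math.max((reading - inMin) / (inMax - inMin), 0), 1);
  return fMin * Math.pow(fMax / fMin, t);
}
```

In a browser, the resulting value could drive a Web Audio `OscillatorNode`'s `frequency` parameter, while the same smoothed stream feeds the visualization.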

More Projects